Lesson 01: Setting Up SSD for Docker on Jetson

SSD Installation

- Physical Installation:

- Power off the Jetson and remove the peripherals.

- Install the NVMe SSD card on the carrier board and secure it.

- Reconnect peripherals and power up the Jetson.

- Verify the SSD installation with the lspci command. The output should include a line like the following:

0007:01:00.0 Non-Volatile memory controller: Marvell Technology Group Ltd. Device 1322 (rev 02)
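If you want to script this check, a minimal sketch is a shell function that looks for the controller class string in the lspci output. The function name has_nvme_controller is hypothetical, and the sketch assumes your drive is reported as a "Non-Volatile memory controller", as in the sample line above.

```shell
# Hypothetical helper: succeeds if the lspci output on stdin contains an
# NVMe controller (class string "Non-Volatile memory controller").
has_nvme_controller() {
  grep -q 'Non-Volatile memory controller'
}

# Example use:
#   lspci | has_nvme_controller && echo "NVMe SSD detected"
```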

- Format the SSD and Create a Mount Point

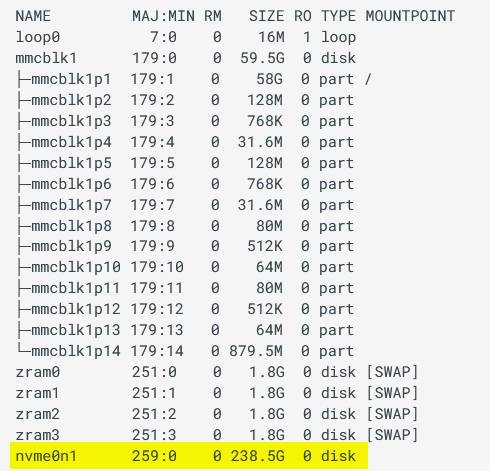

- Find the SSD device name using lsblk command.

The output should look like the following:

In this case, the SSD name is nvme0n1. - Format the SSD:

sudo mkfs.ext4 /dev/nvme0n1

- Create and mount the directory:

sudo mkdir /ssd
sudo mount /dev/nvme0n1 /ssd
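Because mkfs.ext4 destroys whatever is on the target device, it can help to wrap the find/format/mount steps in a guard. The sketch below assumes a single NVMe drive and the /ssd mount point used above. The function names first_nvme_disk and format_and_mount are hypothetical, and the guard (refusing to format a device that already reports a filesystem) is an extra safety check that is not part of the original steps.

```shell
# Hypothetical helper: reads `lsblk -dn -o NAME,TYPE` output on stdin and
# prints the first NVMe disk as a /dev path (for example /dev/nvme0n1).
first_nvme_disk() {
  awk '$2 == "disk" && $1 ~ /^nvme/ { print "/dev/" $1; exit }'
}

# Hypothetical helper: formats $1 as ext4 and mounts it on $2, but refuses
# to run if $1 is not a block device or if the disk or any of its
# partitions already reports a filesystem type.
format_and_mount() {
  dev=$1
  mnt=$2
  if [ ! -b "$dev" ]; then
    echo "error: $dev is not a block device" >&2
    return 1
  fi
  # lsblk lists the disk and its partitions; any non-blank FSTYPE means
  # there is already a filesystem that mkfs.ext4 would erase.
  if lsblk -n -o FSTYPE "$dev" | grep -q '[^[:space:]]'; then
    echo "error: $dev already contains a filesystem; not formatting" >&2
    return 1
  fi
  sudo mkfs.ext4 "$dev" &&
    sudo mkdir -p "$mnt" &&
    sudo mount "$dev" "$mnt"
}

# Example use:
#   dev=$(lsblk -dn -o NAME,TYPE | first_nvme_disk)
#   format_and_mount "$dev" /ssd
```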


- Auto-Mount after boot

Add the SSD to fstab to ensure it auto-mounts on boot.

- First, identify the UUID for your SSD:

lsblk -f

- Then, add a new entry to the fstab file:

sudo nano /etc/fstab

- Insert the following line, replacing the UUID with the value found from lsblk -f:

UUID=************-****-****-****-******** /ssd/ ext4 defaults 0 2


- Finally, change the ownership of the /ssd directory.

sudo chown ${USER}:${USER} /ssd
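The fstab step above can also be scripted so that running it twice does not add a duplicate line. This is a sketch under assumptions: fstab_entry_if_missing is a hypothetical name, the fields mirror the entry above (with /ssd written without the trailing slash), and the UUID is read with blkid instead of being copied by hand from lsblk -f.

```shell
# Hypothetical helper: prints the fstab line for ext4 UUID $1 mounted at $2,
# unless the fstab file $3 (default /etc/fstab) already lists that UUID.
fstab_entry_if_missing() {
  uuid=$1
  mnt=$2
  fstab=${3:-/etc/fstab}
  if [ -z "$uuid" ]; then
    echo "error: empty UUID" >&2
    return 1
  fi
  if grep -q "^UUID=$uuid[[:space:]]" "$fstab" 2>/dev/null; then
    return 0  # entry already present: print nothing
  fi
  printf 'UUID=%s %s ext4 defaults 0 2\n' "$uuid" "$mnt"
}

# Example use (assumes the SSD is /dev/nvme0n1):
#   uuid=$(sudo blkid -s UUID -o value /dev/nvme0n1)
#   fstab_entry_if_missing "$uuid" /ssd | sudo tee -a /etc/fstab
#   sudo findmnt --verify   # check the fstab syntax before rebooting
```

Piping an empty result into tee -a appends nothing, which is what makes the step safe to repeat.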

Docker Configuration

- Install Docker:

If you used an NVIDIA-supplied SD card image to flash your SD card, all necessary JetPack components (including nvidia-containers) and Docker are already installed, so this step can be skipped.

- Update and install the necessary packages:

sudo apt update
sudo apt install -y nvidia-container
curl https://get.docker.com | sh
sudo systemctl --now enable docker
sudo nvidia-ctk runtime configure --runtime=docker
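To decide whether the installation step is needed at all, you can check which of the relevant commands are already on your PATH. A minimal sketch: missing_cmds is a hypothetical helper, and treating the presence of the docker and nvidia-ctk commands as "already installed" is an assumption, not a complete package check.

```shell
# Hypothetical helper: prints each named command that is not on PATH,
# one per line; prints nothing if all of them are available.
missing_cmds() {
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || printf '%s\n' "$cmd"
  done
}

# Example use: empty output suggests the install step can be skipped.
#   missing_cmds docker nvidia-ctk
```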


- Adding User to Docker Group:

Ubuntu users are not in the docker group by default, so they must run docker commands with sudo (the build tools do this automatically when needed). As a result, you may be asked for your sudo password periodically during builds. To avoid this, add your user to the docker group as shown below:

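Restart Docker and add your user to the docker group so that you no longer need to prefix docker commands with sudo:

sudo systemctl restart docker
sudo usermod -aG docker $USER
newgrp docker

The newgrp command starts a shell with the new group active; log out and back in (or reboot) for the membership to apply to all sessions.

- Docker Default Runtime: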

If you are building containers, you need to set Docker's default-runtime to nvidia, so that the NVCC compiler and GPU are available during docker build operations. Add "default-runtime": "nvidia" to your /etc/docker/daemon.json configuration file before attempting to build the containers:

Open the configuration file:

sudo nano /etc/docker/daemon.json

Insert the "default-runtime": "nvidia" line as follows:

{
    "runtimes": {
        "nvidia": {
            "path": "nvidia-container-runtime",
            "runtimeArgs": []
        }
    },
    "default-runtime": "nvidia"
}

- Restart Docker

Then restart the Docker service (or reboot your system before proceeding).

sudo systemctl daemon-reload && sudo systemctl restart docker

- You can then confirm the change in the docker info output:

sudo docker info | grep "Default Runtime"

Default Runtime: nvidia
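A malformed /etc/docker/daemon.json (for example, a missing or trailing comma) prevents the Docker daemon from starting after the restart. The sketch below checks that the file parses as JSON before you restart, and extracts the Default Runtime value from the docker info output. It assumes python3 is installed (only its standard json.tool module is used); both function names are hypothetical.

```shell
# Hypothetical helper: succeeds only if file $1 parses as JSON.
daemon_json_is_valid() {
  python3 -m json.tool "$1" >/dev/null 2>&1
}

# Hypothetical helper: reads `docker info` output on stdin and prints the
# value of its "Default Runtime:" line.
default_runtime() {
  awk -F': ' '/Default Runtime:/ { print $2 }'
}

# Example use:
#   daemon_json_is_valid /etc/docker/daemon.json && sudo systemctl restart docker
#   sudo docker info | default_runtime    # should print nvidia
```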

Migrate the Docker directory to SSD

- Stop the Docker service:

sudo systemctl stop docker

- Copy the existing Docker directory to the SSD:

sudo du -csh /var/lib/docker/ && \
    sudo mkdir /ssd/docker && \
    sudo rsync -axPS /var/lib/docker/ /ssd/docker/ && \
    sudo du -csh /ssd/docker/

- Edit /etc/docker/daemon.json:

sudo nano /etc/docker/daemon.json

Insert the "data-root" line as follows:

{
    "runtimes": {
        "nvidia": {
            "path": "nvidia-container-runtime",
            "runtimeArgs": []
        }
    },
    "default-runtime": "nvidia",
    "data-root": "/ssd/docker"
}

- Rename the old Docker directory and restart the service:

sudo mv /var/lib/docker /var/lib/docker.old

- Restart the Docker daemon:

sudo systemctl daemon-reload && \
    sudo systemctl restart docker && \
    sudo journalctl -u docker

- You can then confirm the change in the docker info output:

sudo docker info | grep 'Docker Root Dir'

Docker Root Dir: /ssd/docker
...
Default Runtime: nvidia

The Docker daemon will also have changed the permissions of that directory to root-only access.
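The migration steps can be collected into one function with a guard: if /ssd is not actually mounted, mkdir and rsync would write Docker's data into the /ssd directory on the root filesystem instead of onto the SSD. This is a sketch, not a drop-in script: run and migrate_docker_root are hypothetical names, DRY_RUN is an environment variable invented here to print the commands instead of running them, and the function assumes you have already added the "data-root" entry to /etc/docker/daemon.json.

```shell
# Hypothetical helper: runs its arguments, or only prints them when the
# DRY_RUN environment variable is non-empty.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: follows the migration steps above, copying
# /var/lib/docker to $1/docker. Nothing runs unless $1 (e.g. /ssd) is a
# mount point. Assumes "data-root": "$1/docker" is already in daemon.json.
migrate_docker_root() {
  mnt=$1
  if ! mountpoint -q "$mnt"; then
    echo "error: $mnt is not a mount point; is the SSD mounted?" >&2
    return 1
  fi
  run sudo systemctl stop docker &&
    run sudo mkdir -p "$mnt/docker" &&
    run sudo rsync -axPS /var/lib/docker/ "$mnt/docker/" &&
    run sudo mv /var/lib/docker /var/lib/docker.old &&
    run sudo systemctl daemon-reload &&
    run sudo systemctl restart docker
}

# Example use (bash): preview the commands first, then run them.
#   DRY_RUN=1 migrate_docker_root /ssd
#   migrate_docker_root /ssd
```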

Test Docker on SSD

- [Terminal 1] First, open a terminal to monitor the disk usage while pulling a Docker image.

watch -n1 df

- [Terminal 2] Next, open a new terminal and start the Docker pull.

docker pull nvcr.io/nvidia/l4t-base:r35.2.1

- [Terminal 1] Observe that the disk usage on /ssd goes up as the container image is downloaded and extracted.

docker image ls

REPOSITORY                TAG       IMAGE ID       CREATED        SIZE
nvcr.io/nvidia/l4t-base   r35.2.1   dc07eb476a1d   7 months ago   713MB
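If you would rather not watch df interactively, you can record how much space on /ssd the pull consumed. A minimal sketch: used_kb is a hypothetical helper built on POSIX df -Pk output, where the third column is the used space in 1024-byte blocks.

```shell
# Hypothetical helper: prints the used space, in KiB, of the filesystem
# that contains path $1 (column 3 of `df -Pk` output).
used_kb() {
  df -Pk "$1" | awk 'NR == 2 { print $3 }'
}

# Example use:
#   before=$(used_kb /ssd)
#   docker pull nvcr.io/nvidia/l4t-base:r35.2.1
#   after=$(used_kb /ssd)
#   echo "pull used $(( (after - before) / 1024 )) MiB on /ssd"
```

Note that if /ssd is not mounted, df reports the filesystem that holds the /ssd directory (the root filesystem), so a growing number there is not proof that the SSD is being used.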

Final Verification

Reboot your Jetson and verify that you see the following:

sudo blkid | grep nvme

/dev/nvme0n1: UUID="e9ef630c-feb5-4610-ab26-ca2b328b3f66" BLOCK_SIZE="4096" TYPE="ext4"

df -h

Filesystem      Size  Used Avail Use% Mounted on
/dev/mmcblk0p1  116G   25G   87G  23% /
tmpfs           3.8G  172K  3.8G   1% /dev/shm
tmpfs           1.5G   19M  1.5G   2% /run
tmpfs           5.0M  4.0K  5.0M   1% /run/lock
/dev/nvme0n1    916G  752M  869G   1% /ssd
tmpfs           763M  116K  762M   1% /run/user/1000

docker info | grep Root

Docker Root Dir: /ssd/docker

sudo ls -l /ssd/docker/

total 44
drwx--x--x  4 root root 4096 Jul 15 15:32 buildkit
drwx--x---  2 root root 4096 Jul 15 15:32 containers
-rw-------  1 root root   36 Jul 15 15:32 engine-id
drwx------  3 root root 4096 Jul 15 15:32 image
drwxr-x---  3 root root 4096 Jul 15 15:32 network
drwx--x--- 13 root root 4096 Jul 23 00:53 overlay2
drwx------  4 root root 4096 Jul 15 15:32 plugins
drwx------  2 root root 4096 Jul 23 00:25 runtimes
drwx------  2 root root 4096 Jul 15 15:32 swarm
drwx------  2 root root 4096 Jul 23 00:53 tmp
drwx-----x  2 root root 4096 Jul 23 00:25 volumes

sudo du -chs /ssd/docker/

752M    /ssd/docker/
752M    total

docker info | grep -e "Runtime" -e "Root"

Runtimes: io.containerd.runc.v2 nvidia runc
Default Runtime: nvidia
Docker Root Dir: /ssd/docker
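The checks above can be bundled into a few lines to run after each reboot. A sketch under assumptions: check_docker_info and in_group are hypothetical helpers, the expected values (/ssd, /ssd/docker, nvidia, the docker group) are the ones used in this lesson, and docker info is parsed as plain text.

```shell
# Hypothetical helper: reads `docker info` output on stdin and succeeds only
# if the Docker root dir is $1 and the default runtime is nvidia.
check_docker_info() {
  info=$(cat)
  root=$(printf '%s\n' "$info" | awk -F': ' '/Docker Root Dir:/ { print $2 }')
  runtime=$(printf '%s\n' "$info" | awk -F': ' '/Default Runtime:/ { print $2 }')
  [ "$root" = "$1" ] && [ "$runtime" = "nvidia" ]
}

# Hypothetical helper: succeeds if the current user is in group $1.
in_group() {
  id -nG | tr ' ' '\n' | grep -qx "$1"
}

# Example use after a reboot:
#   mountpoint -q /ssd && echo "ok: /ssd is mounted"
#   sudo docker info | check_docker_info /ssd/docker && echo "ok: root dir and runtime"
#   in_group docker && echo "ok: $(id -un) is in the docker group"
```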

Your Jetson is now set up with the SSD!